CPU Data Readback

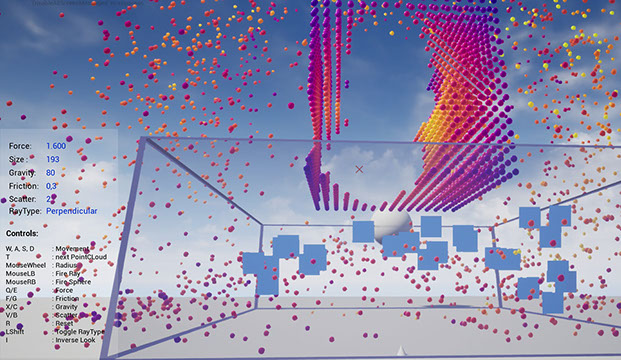

Too much data for CPU

The Point Cloud Kit can render so many points fully interactively because all of the data is calculated on the GPU, inside a specific material assigned to the PCA.

The drawback is that this makes it very difficult to build gameplay mechanics on top of the points, for example a trigger volume that should react when some points are inside it. In most cases the hit detection does not need to be “frame perfect”; for gameplay it is usually enough if the trigger fires within about one second.

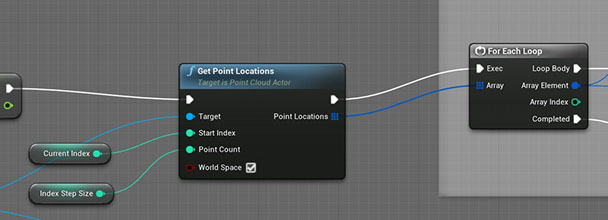

Version 1.1 (Unreal Engine 4.26 and 4.27 only) introduces a new Blueprint function node: GetPointLocations.

This node reads back a range of point locations and returns them as an array of Linear Color (float4) values. With the WorldSpace flag set, all locations are automatically converted into world space if necessary. The range is defined by a Start Index and a Point Count, so you can step through the current Point Cloud over time, for example 1000 points per step every 100 ms.
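The stepping described above can be sketched as a small cursor that hands out the next Start Index and Point Count on each step and wraps around at the end of the cloud. This is plain C++, not Unreal code: `ReadbackBatch` and `ReadbackCursor` are hypothetical names, and each returned range is what you would pass to GetPointLocations as its Start Index and Point Count.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical range for one GetPointLocations call.
struct ReadbackBatch {
    int32_t StartIndex;
    int32_t PointCount;
};

// Hypothetical helper that walks through a cloud of TotalPoints points
// in batches of at most BatchSize points, wrapping back to index 0.
class ReadbackCursor {
public:
    ReadbackCursor(int32_t TotalPoints, int32_t BatchSize)
        : Total(TotalPoints), Batch(BatchSize) {
        assert(TotalPoints > 0 && BatchSize > 0);
    }

    // Returns the range for the next step and advances the cursor.
    // The last batch of a pass is clamped so it never reads past the end.
    ReadbackBatch Next() {
        ReadbackBatch Result{Start, std::min<int32_t>(Batch, Total - Start)};
        Start += Result.PointCount;
        if (Start >= Total) {
            Start = 0;  // the next step begins a new pass
        }
        return Result;
    }

private:
    int32_t Total;
    int32_t Batch;
    int32_t Start = 0;
};
```

With 1000 points per step, a cloud of 2500 points yields the ranges (0, 1000), (1000, 1000), (2000, 500) and then starts over at (0, 1000). Clamping the last batch is a design choice of this sketch; how GetPointLocations itself handles a range that runs past the end is not covered here.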

Note that iterating over really large Point Clouds (100k points and more) still takes a while, so this is best used on smaller point counts. The next issue: once you have an array of ~1000 points, testing all of them against a volume in a single frame eats up a lot of CPU time, and that time is usually needed for other per-frame work (AI, gameplay, physics).
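A quick estimate shows why large clouds are slow to cover. With batches of $B$ points read every $\Delta t$ seconds, one full pass over $N$ points takes the time below; here it is evaluated for the example rate of 1000 points every 100 ms and a cloud of 100k points:

$$
T_{\text{pass}} = \left\lceil \frac{N}{B} \right\rceil \cdot \Delta t = \left\lceil \frac{100\,000}{1000} \right\rceil \cdot 0.1\,\text{s} = 100 \cdot 0.1\,\text{s} = 10\,\text{s}
$$

A point that enters the trigger volume right after its batch was read can therefore go unnoticed for up to about 10 s, well beyond the ~1 second budget mentioned above. At the same rate, a 10k-point cloud is covered in $10 \cdot 0.1\,\text{s} = 1\,\text{s}$.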

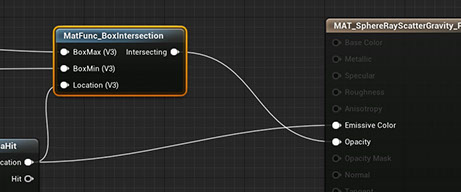

This is why the array contains Linear Color (float4) values: the R, G and B channels hold the X, Y and Z location, while the Alpha channel can be used to mark a point from within the Material.
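The channel layout just described can be mirrored on the CPU side with two tiny accessors. `LinearColor4` stands in for Unreal's FLinearColor (which exposes public float members R, G, B and A); `Vec3`, `LocationOf` and `IsMarked` are hypothetical names. The 0.5 threshold is an assumption of this sketch: it treats anything above one half as marked instead of comparing against 1.0 exactly, which is more forgiving of floating-point rounding.

```cpp
#include <cassert>

// Stand-in for Unreal's FLinearColor: four public floats.
struct LinearColor4 {
    float R, G, B, A;
};

// Hypothetical plain 3D vector for the point location.
struct Vec3 {
    float X, Y, Z;
};

// R, G, B carry the X, Y, Z location of the point.
inline Vec3 LocationOf(const LinearColor4& C) {
    return Vec3{C.R, C.G, C.B};
}

// Alpha carries the mark written by the Material.
// Assumption: values above 0.5 count as marked, instead of an exact
// comparison with 1.0, to tolerate small rounding differences.
inline bool IsMarked(const LinearColor4& C) {
    return C.A > 0.5f;
}
```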

See the demo scene Physics_Readback in PointCloudKit Content/Examples/Scenes.

It uses the PCA PCA_SphereTwoRayScatterGravity_Physics_RB, which in turn uses the Material MAT_SphereRayScatterGravity_Physics_RB.

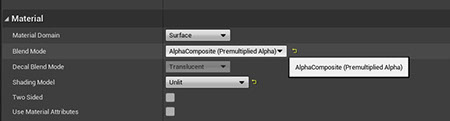

The Material's BlendMode is set to AlphaComposite so that a value (range 0.0 to 1.0) can be written into the alpha channel through the Opacity output.

MatFunc_BoxIntersection checks whether a point lies within an axis-aligned bounding box (AABB) and, if so, writes 1.0 into Opacity. This is done on the GPU. All you need to do in Blueprint is test whether “W” (the alpha value) is 1.0, which is much more efficient than doing the intersection test in Blueprint on the CPU.
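For comparison, this is roughly the test that MatFunc_BoxIntersection takes off the CPU. It is a sketch under assumptions, not the material function's actual graph: the box is described by hypothetical `BoxMin`/`BoxMax` corners in the same space as the points, and points exactly on the boundary count as inside. Done per point in Blueprint, this costs six comparisons, whereas the GPU-marked result needs only a single alpha check.

```cpp
#include <cassert>

struct Vec3 {
    float X, Y, Z;
};

// Hypothetical CPU equivalent of an axis-aligned box containment test.
// Returns the value the material would write into Opacity:
// 1.0 if the point lies inside [BoxMin, BoxMax] on all three axes, else 0.0.
inline float BoxIntersectionOpacity(const Vec3& P, const Vec3& BoxMin, const Vec3& BoxMax) {
    const bool Inside =
        P.X >= BoxMin.X && P.X <= BoxMax.X &&
        P.Y >= BoxMin.Y && P.Y <= BoxMax.Y &&
        P.Z >= BoxMin.Z && P.Z <= BoxMax.Z;
    return Inside ? 1.0f : 0.0f;
}
```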

AlphaComposite has some side effects to be aware of. The Point Cloud needs to clear its internal Render Targets, so make sure ClearRT is checked. Also, because of the math behind this blend mode, a value x written into Opacity would normally read back on the CPU as 1 - x. This is corrected internally, but only for GetPointLocations.
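The 1 - x behaviour can be illustrated in a few lines. GetPointLocations already applies this correction, so the sketch only matters if you read the internal Render Targets through some other path. `ReadRawAlpha` and `CorrectedAlpha` are hypothetical names that model the effect described above, not the blend mode's actual shader math.

```cpp
#include <cassert>

// Models what the CPU would see without correction:
// a value X written into Opacity reads back as 1 - X.
inline float ReadRawAlpha(float WrittenOpacity) {
    return 1.0f - WrittenOpacity;
}

// Undoes the inversion so the value matches what the material wrote.
inline float CorrectedAlpha(float RawAlpha) {
    return 1.0f - RawAlpha;
}
```

A marked point (Opacity 1.0) would therefore show up as 0.0 in an uncorrected readback, which is why testing for 1.0 only works on values that have been corrected.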


In the Physics_Readback demo scene, points that fall into the translucent box are marked step by step over time with DrawDebugPoint (for demonstration and testing only).
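Stepping “over time” needs some fixed interval, such as the 100 ms mentioned earlier. One common approach, sketched here in plain C++ with a hypothetical `StepAccumulator`, is to accumulate each frame's delta time and run one readback step every time the interval elapses; in Unreal, a looping timer (for example the Blueprint node Set Timer by Event) is an alternative. Capping the steps per frame and dropping any remaining backlog are assumptions of this sketch, meant to keep a long frame hitch from triggering a burst of readbacks.

```cpp
#include <cassert>

// Hypothetical fixed-interval scheduler for readback steps.
class StepAccumulator {
public:
    StepAccumulator(float IntervalSeconds, int MaxStepsPerFrame)
        : Interval(IntervalSeconds), MaxSteps(MaxStepsPerFrame) {
        assert(IntervalSeconds > 0.0f && MaxStepsPerFrame > 0);
    }

    // Call once per frame with the frame's delta time.
    // Returns how many readback steps should run this frame.
    int Tick(float DeltaSeconds) {
        Elapsed += DeltaSeconds;
        int Steps = 0;
        while (Elapsed >= Interval && Steps < MaxSteps) {
            Elapsed -= Interval;
            ++Steps;
        }
        // Only reachable when the cap was hit: drop the backlog.
        if (Elapsed >= Interval) {
            Elapsed = 0.0f;
        }
        return Steps;
    }

private:
    float Interval;
    int MaxSteps;
    float Elapsed = 0.0f;
};
```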